In a previous post, Build a Pro Deep Learning Workstation… for Half the Price, I shared every detail needed to buy parts and build a professional-quality deep learning rig for nearly half the cost of pre-built rigs from companies like Lambda and Bizon. The post went viral on Reddit, and in the weeks that followed Lambda reduced their 4-GPU workstation price by around $1200.

This is a good start toward making deep learning more accessible, but if you’d rather spend $7000 instead of $11,250+, here’s how.

In the previous post I stated, “there is no perfect build,” but if there was a perfect build at the lowest cost, what would it be? That’s what I show here. Check out the previous post for component explanations, benchmarking, and additional options for this 4-GPU deep learning rig.

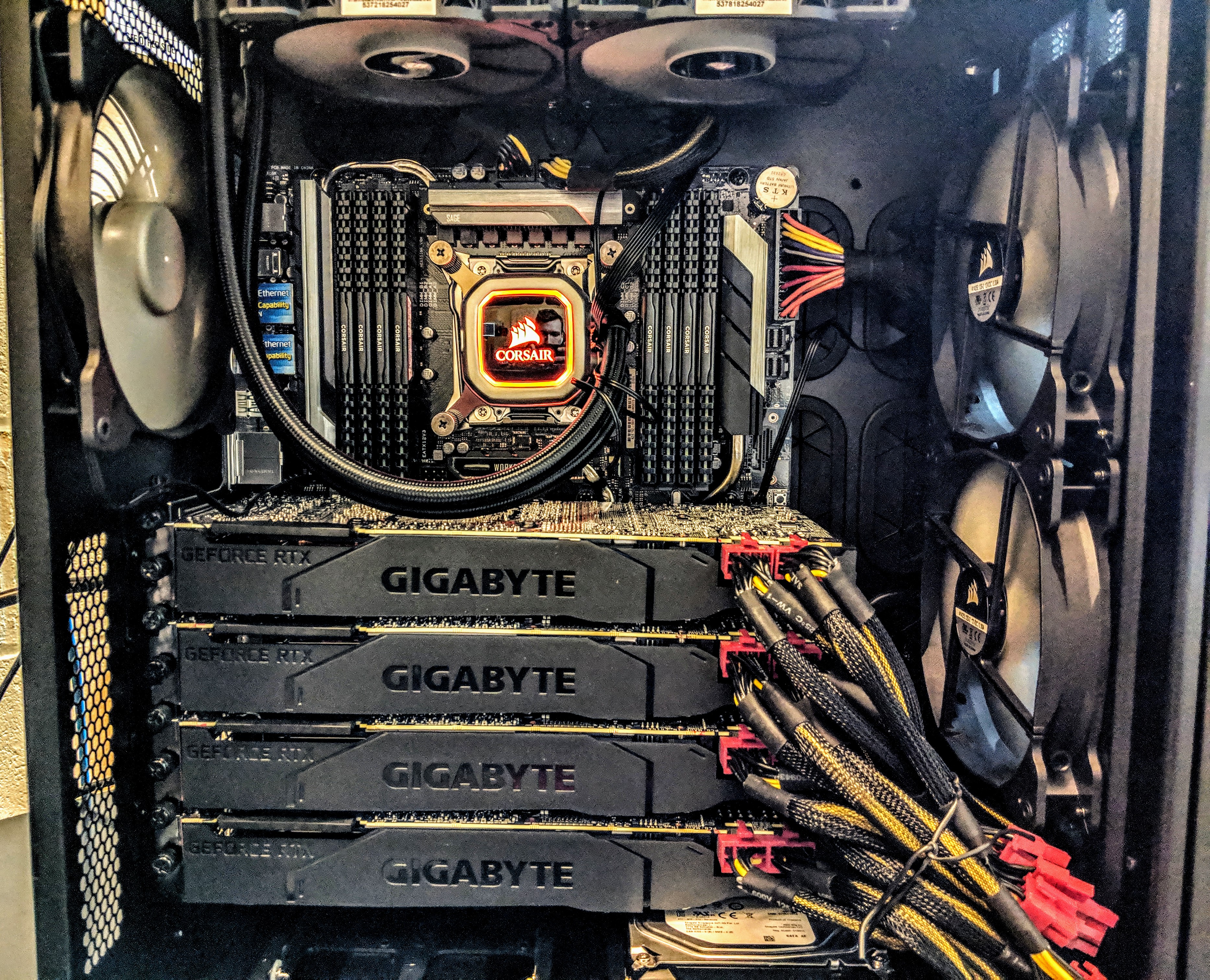

The goal of this post is to list exactly which parts to buy to build a state-of-the-art 4-GPU deep learning rig at the lowest possible cost. Based on feedback that there were too many options in the previous post, I list only the single best option for each component. I built three variations of multi-GPU rigs, and the one I present here provides the best performance and reliability, without thermal throttling, at the lowest cost.

I’ve included my receipt, showing the purchase of all the parts to build two of these rigs for $14000 ($7000 each).

Exactly which parts to buy

I ordered everything online via NeweggBusiness, but any vendor (e.g. Amazon) works. If you have a Micro Center store nearby, they often have low CPU prices for in-store purchases. Don’t pay tax if you don’t need to (e.g. non-profit or educational institutions). Both NeweggBusiness and Amazon accept tax-exemption documents. View my receipt for two of these 4-GPU rigs.

Here is each component:

4 RTX 2080 Ti GPUs (fastest GPU under $2000, likely for a few years)

Gigabyte RTX 2080 Ti Turbo 11GB, $1280 (04/16/2019)

These 2-PCI-slot blower-style RTX 2080 Ti GPUs will also work:

1. ASUS GeForce RTX 2080 Ti 11G Turbo Edition GD, $1209 (03/21/2019)

2. ZOTAC Gaming GeForce RTX 2080 Ti Blower 11GB, $1299 (03/21/2019)

Rosewill Hercules 1600W PSU (cheapest 1600W power supply)

Rosewill HERCULES 1600W Gold PSU, $209 (03/21/2019)

1TB m.2 SSD (for ultrafast data loading in deep learning)

HP EX920 M.2 1TB PCIe NVMe NAND SSD, $150 (04/16/2019)

20-thread CPU (choose Intel over AMD for fast single-thread speed)

Intel Core i9-9820X Skylake X 10-Core 3.3GHz, $850 (03/21/2019)

X299 Motherboard (this motherboard fully supports 4 GPUs)

ASUS WS X299 SAGE LGA 2066 Intel X299, $492.26 (03/21/2019)

Case (high airflow keeps the GPUs cool)

Corsair Carbide Series Air 540 ATX Case, $115 (04/16/2019)

3TB Hard Drive (for data and models you don’t access regularly)

Seagate BarraCuda ST3000DM008 3TB 7200 RPM, $75 (04/16/2019)

128GB RAM (more RAM reduces the GPU-to-disk bottleneck)

8 sticks of CORSAIR Vengeance 16GB DRAM, $640 (04/16/2019)

CPU Cooler (this cooler doesn’t block case airflow)

Corsair Hydro Series H100i PRO Low Noise, $130 (04/16/2019)
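
To sanity-check that a single 1600W power supply is enough for this parts list, here is a rough power budget in Python. The wattages are assumptions, not measurements from my rigs: 250 W per GPU and 165 W for the CPU are the vendors' published TDP figures as I understand them, and the 150 W allowance for the motherboard, RAM, drives, fans and pump is a hypothetical round number.

```python
# Rough power budget for the 4-GPU parts list above.
# Every wattage here is an assumption (published TDPs plus a guessed
# allowance), not a measurement taken at the wall.
GPU_TDP_W = 250   # assumed reference TDP of one RTX 2080 Ti
CPU_TDP_W = 165   # assumed TDP of the Intel Core i9-9820X
OTHER_W = 150     # hypothetical allowance: board, RAM, drives, fans, pump
PSU_W = 1600      # rated output of the Rosewill HERCULES 1600W


def power_budget(num_gpus=4):
    """Return (estimated draw in W, headroom in W, fraction of PSU rating)."""
    total = num_gpus * GPU_TDP_W + CPU_TDP_W + OTHER_W
    return total, PSU_W - total, total / PSU_W


total, headroom, load = power_budget()
print(f"Estimated draw: {total} W, headroom: {headroom} W, load: {load:.0%}")
```

Under these assumptions the estimate stays below the PSU rating with a few hundred watts to spare. Real draw can spike above TDP for short periods, so treat this as a planning estimate only.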

Comparison with Lambda’s 4-GPU Workstation

This $7000 4-GPU rig is similar to Lambda’s $11,250 4-GPU workstation. The only differences are that (1) they use a 12-core CPU instead of a 10-core CPU and (2) they include a hot-swap drive bay ($50).
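
For a quick sense of scale, the two prices quoted above can be compared directly. This small sketch uses only the $7000 and $11,250 figures from this post; the function and variable names are just for illustration.

```python
# Price comparison using only the two figures quoted in this post.
DIY_PRICE = 7_000       # this build, per rig
LAMBDA_PRICE = 11_250   # Lambda's 4-GPU workstation


def savings(diy=DIY_PRICE, prebuilt=LAMBDA_PRICE):
    """Return (dollars saved, savings as a fraction of the pre-built price)."""
    saved = prebuilt - diy
    return saved, saved / prebuilt


saved, fraction = savings()
print(f"Saved ${saved:,} ({fraction:.1%} of the pre-built price)")
# prints: Saved $4,250 (37.8% of the pre-built price)
```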

Operating System and Performance

The operating system I’m using is Ubuntu Server 18.04 LTS. I’m using CUDA 10.1 with TensorFlow and PyTorch, both installed using conda. I’ve run training on several of these machines at 100% GPU utilization on all four GPUs for over a month without any issues or thermal throttling.
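
If you want to watch for the same thing on your own rig, one option is to poll `nvidia-smi` during training and flag any GPU that runs hot. The sketch below is not the script I used; it is a minimal illustration that assumes `nvidia-smi` is on the PATH and that every queried field returns a number. The 85 °C warning threshold and the helper names are hypothetical.

```python
import subprocess

# Fields requested from nvidia-smi's --query-gpu option.
QUERY = "index,temperature.gpu,utilization.gpu"
TEMP_WARN_C = 85  # hypothetical warning threshold, not a vendor limit


def parse_gpu_stats(csv_text):
    """Parse 'index, temp, util' CSV lines into a list of dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, temp_c, util_pct = (int(field.strip()) for field in line.split(","))
        stats.append({"index": index, "temp_c": temp_c, "util_pct": util_pct})
    return stats


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed per-GPU readings."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_stats(result.stdout)


def hot_gpus(stats, limit_c=TEMP_WARN_C):
    """Return the indices of GPUs at or above the temperature limit."""
    return [gpu["index"] for gpu in stats if gpu["temp_c"] >= limit_c]
```

Calling `hot_gpus(read_gpu_stats())` every few seconds while a training job runs gives a simple early warning. An empty list means no GPU has reached the chosen threshold.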

Related Work

- Relative positioning of GPUs for optimal speed: [view this post]

- How to build a multi-GPU deep learning machine: [view this post]

- Benchmarking RTX 20-series GPUs for deep learning: [view this post]
